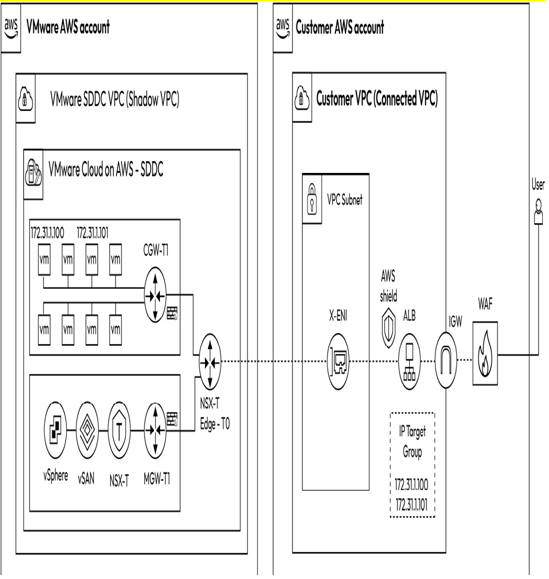

AWS Elastic Load Balancing (ELB) is a service that automatically distributes incoming application traffic across multiple targets and virtual appliances. The targets can be EC2 instances, IP addresses, or Lambda functions. When integrating with workloads on VMware Cloud on AWS, the target type is always IP address. The Application Load Balancer (ALB) load-balances HTTP and HTTPS requests (Layer 7), while the Network Load Balancer (NLB) load-balances network/transport protocols (Layer 4 – TCP and UDP requests).

Figure 8.3 – Amazon ELB integration with VMware Cloud on AWS

Figure 8.3 illustrates that the load balancer infrastructure, including Amazon ELB, is hosted in the connected VPC on the right side, while workloads on the VMware Cloud on AWS SDDC are used as IP targets. Inbound traffic from users anywhere on the internet reaches the load balancer's public IP address through the connected VPC’s internet gateway. The load balancer then distributes requests to VMs based on the IP target group configured on it. Traffic between the load balancer and workloads on the VMware Cloud on AWS SDDC is routed through the ENI and over the NSX-T Edge T0 router before eventually reaching the VMs on the VMware Cloud SDDC.
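As a minimal sketch of how SDDC workloads could be registered as IP targets, the following Python helper builds the parameters for the ELBv2 `RegisterTargets` call. The target group ARN, the IP addresses, and the CIDR range are hypothetical placeholders, and the helper name `build_ip_target_registration` is our own. The sketch assumes that the SDDC workload IPs fall outside the connected VPC's CIDR range, in which case each target is registered with the Availability Zone value `all`.

```python
import ipaddress


def build_ip_target_registration(target_group_arn, vm_ips, port, vpc_cidr):
    """Build keyword arguments for the ELBv2 RegisterTargets call.

    All values passed in by the caller are illustrative; nothing here
    contacts AWS.
    """
    vpc_network = ipaddress.ip_network(vpc_cidr)
    targets = []
    for ip in vm_ips:
        target = {"Id": ip, "Port": port}
        # IP targets outside the load balancer's VPC CIDR range are
        # registered with AvailabilityZone set to "all".
        if ipaddress.ip_address(ip) not in vpc_network:
            target["AvailabilityZone"] = "all"
        targets.append(target)
    return {"TargetGroupArn": target_group_arn, "Targets": targets}


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    params = build_ip_target_registration(
        target_group_arn="arn:aws:elasticloadbalancing:region:account:targetgroup/example",
        vm_ips=["192.168.10.11", "192.168.10.12"],
        port=443,
        vpc_cidr="10.0.0.0/16",
    )
    print(params)
    # With credentials configured, the dictionary could be passed to boto3:
    #   boto3.client("elbv2").register_targets(**params)
```

Building the request as a plain dictionary keeps the example runnable without AWS credentials and makes the target list easy to review before it is applied.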

Organizations can enhance the security of their Amazon ELB and the workloads behind it by using AWS WAF (Web Application Firewall). WAF enables organizations to monitor the HTTP(S) requests forwarded to their web application resources. It can help protect websites from common attack techniques such as SQL injection and Cross-Site Scripting (XSS). Organizations can also create rules that block or rate-limit traffic from specific user agents, IP addresses, or requests with certain headers. In addition, AWS Shield can be used on the elastic load balancer for protection against Distributed Denial of Service (DDoS) attacks.
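To make the rate-limiting idea concrete, the following sketch builds a rate-based rule in the shape used by the AWS WAFv2 API, blocking any single source IP address that exceeds a request limit within WAF's evaluation window. The rule name, priority, and limit are hypothetical values, and the helper name `build_rate_limit_rule` is our own; the rule would still need to be placed in a web ACL associated with the load balancer.

```python
def build_rate_limit_rule(name, priority, request_limit):
    """Return a WAFv2-style rate-based rule as a plain dictionary.

    The rule blocks requests from any source IP address that exceeds
    request_limit within the evaluation window.
    """
    return {
        "Name": name,
        "Priority": priority,
        "Statement": {
            "RateBasedStatement": {
                "Limit": request_limit,
                # Count requests per source IP address.
                "AggregateKeyType": "IP",
            }
        },
        "Action": {"Block": {}},
        "VisibilityConfig": {
            "SampledRequestsEnabled": True,
            "CloudWatchMetricsEnabled": True,
            "MetricName": name,
        },
    }


if __name__ == "__main__":
    # Hypothetical rule values for illustration only.
    rule = build_rate_limit_rule("limit-per-ip", priority=1, request_limit=1000)
    print(rule)
```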

In addition, organizations can leverage Amazon CloudFront (not depicted in Figure 8.3), a Content Delivery Network (CDN) that improves the delivery of dynamic and static web content to end users and enhances the reliability and availability of web applications. CloudFront caches copies of frequently accessed files at edge locations across the globe. These edge locations form a global network of data centers from which CloudFront delivers content. When an end user requests content served by CloudFront, the request is routed to the edge location that provides the lowest latency for that user.
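The behavior described above can be pictured with a toy model. The following sketch is not how CloudFront is implemented; it only illustrates the two ideas of routing a request to the lowest-latency edge location and serving cached copies from that location. The edge names, latency figures, and function names are all hypothetical.

```python
def lowest_latency_edge(latency_ms):
    """Return the edge location with the smallest measured latency."""
    return min(latency_ms, key=latency_ms.get)


def serve(edge_caches, edge, path, fetch_from_origin):
    """Serve a path from the edge cache, fetching from the origin on a miss."""
    cache = edge_caches.setdefault(edge, {})
    if path in cache:
        return cache[path], "hit"
    body = fetch_from_origin(path)
    cache[path] = body  # keep a copy at the edge for later requests
    return body, "miss"


if __name__ == "__main__":
    # Hypothetical latencies from one end user to three edge locations.
    latencies = {"edge-frankfurt": 18, "edge-london": 25, "edge-virginia": 95}
    edge = lowest_latency_edge(latencies)

    caches = {}
    origin = lambda path: f"content of {path}"
    print(edge, serve(caches, edge, "/index.html", origin))
    print(edge, serve(caches, edge, "/index.html", origin))
```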

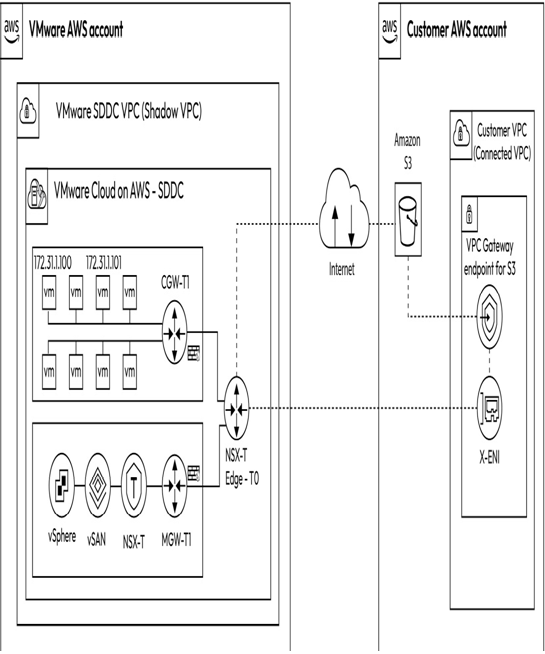

Integrating Amazon Simple Storage Service

Amazon Simple Storage Service (S3) is a cloud-based object storage service that enables organizations to store and retrieve data from anywhere at any time. Using S3, organizations can leverage cloud-native storage that offers high scalability, performance, security, and durability at a low cost to develop a wide range of applications. Figure 8.4 illustrates how VMware Cloud on AWS workloads can privately access an Amazon S3 bucket using a VPC gateway endpoint.

Figure 8.4 – Amazon S3 integration with VMware Cloud on AWS

Workloads running on VMware Cloud on AWS SDDCs can access public S3 buckets over the internet. However, this approach is less secure and incurs data egress costs per GB for all data accessed from the S3 bucket. For this reason, organizations are advised to use an S3 VPC gateway endpoint, which provides private access to the S3 bucket without exposing the traffic to the public internet. The S3 VPC gateway endpoint is created in the connected VPC. The workload traffic from the SDDC traverses the NSX Edge Tier-0 Logical Router through the X-ENI to the S3 VPC gateway endpoint in the connected VPC and eventually reaches the S3 bucket.
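As a rough sketch of what creating the gateway endpoint in the connected VPC involves, the following helper assembles the parameters for the EC2 `CreateVpcEndpoint` call. The VPC ID, region, and route table ID are hypothetical placeholders, and the helper name `build_s3_gateway_endpoint_request` is our own; the route table should be the one used by the connected VPC subnet that carries the SDDC traffic.

```python
def build_s3_gateway_endpoint_request(vpc_id, region, route_table_ids):
    """Build keyword arguments for the EC2 CreateVpcEndpoint call.

    A gateway endpoint is attached to route tables, so S3-bound traffic
    from those routes stays on the AWS network.
    """
    return {
        "VpcEndpointType": "Gateway",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.s3",
        "RouteTableIds": list(route_table_ids),
    }


if __name__ == "__main__":
    # Hypothetical identifiers for illustration only.
    request = build_s3_gateway_endpoint_request(
        vpc_id="vpc-0123456789abcdef0",
        region="us-west-2",
        route_table_ids=["rtb-0123456789abcdef0"],
    )
    print(request)
    # With credentials configured, the dictionary could be passed to boto3:
    #   boto3.client("ec2").create_vpc_endpoint(**request)
```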